The Night Silence Shattered

At 2:30 AM, in my neighborhood near Korea University, I was jolted awake by a woman’s piercing scream. I’m usually a heavy sleeper, so at first I tried to convince myself it was just stray cats fighting in the alley. “Maybe it’s just another nightmare,” I thought, trying to drift back to sleep.

But then, the words “Save me!” (살려달라) cut through the air. It was no dream.

I threw open the window. My neighborhood is a purely residential area, but it’s far from isolated: it sits right next to an eight-lane highway and a police station. As I listened, the sounds grew more disturbing, including the unmistakable noise of someone being strangled and gasping for air. Terrified and now wide awake, I called the police immediately. I told them it was a critical situation, likely domestic violence. But when they asked for the exact location, I froze. Having just woken up, I couldn’t pinpoint where in the maze of buildings the sound was coming from.

Into the Dark Alley

I couldn’t just sit there. I threw on my shoes and headed out. To this day, I’m not sure how I managed to run toward the sound so quickly. I knew I couldn’t fight a criminal, but I could at least find the source so the police wouldn’t waste time.

Surprisingly, finding the source was easy. The screams were coming from the third floor of a motel right by the main road. The closer I got, the more horrific it became. I heard the thuds of someone being hit, the sound of things breaking, and more of that haunting, stifled gasping. In those few minutes, I heard her beg for her life at least twenty times.

4 Minutes to Rescue

Fortunately, the response was fast. Within four minutes of my call, three police cars and a fire truck arrived. I pointed out the exact window. Five firefighters broke down the door with their gear, while six officers and detectives rushed in. They caught the man on the spot.

The Arrest and a Disturbing Reality

The perpetrators were identified as two foreign nationals—one reportedly a laborer and the other a self-proclaimed university student. They were arrested on the spot and taken away in a patrol car. As the first person to report the crime and a key witness, I stayed behind to answer a few questions from the police.

Fortunately, I didn’t have to witness the full extent of the victim’s physical state, as she was being attended to privately by a female officer in the ambulance. However, the details I gathered from a nearby detective were haunting. According to the victim, she had been subjected to indiscriminate violence since 2:00 AM by a man she was dating. She desperately tried to reach her phone to call for help, but it was out of reach, leaving her trapped in a nightmare.

What truly shocked me was this: she had been screaming for nearly 40 minutes before I made the call. In the heart of Seoul—a place with constant foot traffic and right near a police station—not a single neighbor or passerby had reported it. It made me realize that if the attacker had intended to kill, it could have ended in a silent tragedy before anyone even picked up the phone.

The Vulnerability of an Outsider

This incident also made me reflect on the vulnerability of foreigners. As a foreign national, the victim likely lacked the local knowledge that residents take for granted, such as which areas are safe and how to navigate the local system in a crisis. If I were staying at a roadside motel in Ohio and found myself in danger, I wouldn’t have the instincts local residents have about whether the area is safe or how to react. For a stranger in a foreign land, that situational awareness is missing, which makes them an even easier target for crime.

Seeking Help: The Three Pillars of Intervention

This night changed how I view personal safety. Sometimes we find ourselves desperate for help but unable to reach for it. So what is the best way to ask for assistance? The most effective way is to directly ask someone who is trustworthy, immediate, and capable: someone who won’t ignore you, who can act instantly, and who has the professional means to help. Public authorities such as the police or firefighters generally meet all three criteria. But as this case proved, there are moments when reaching out to them directly is simply impossible.

Going back to the beginning, the only reason I was able to help that night was because of the scream. Screaming is our most fundamental, primal reaction to danger. We scream when we are startled, when we are terrified, and when we are in pain. It is a natural physiological response that is almost impossible to suppress in extreme fear. In a world where technology can be taken away and neighbors might stay silent, the human voice remains our most raw and effective tool for survival. If we can find a way to ensure that a simple scream can bridge the gap to those who can help, it might just be the most powerful lifeline we have.

I began to wonder: Isn’t there an app for this? After some digging, I found an Android app called “Chilla,” developed by an Indian developer in 2015. Its concept was simple: it detected danger through a physical button press or a scream, then sent an SMS to the police or pre-designated contacts. However, because the app was so old, I couldn’t test how accurately it actually detected screams or if it even functioned today.

Driven by curiosity, I tracked down the developer, Mr. Kishlay Raj, on LinkedIn and asked him two questions: (1) Did he use deep learning for detection? (2) Why is the app no longer active? He kindly shared his insights:

OS Obstacles: As Android evolved, keeping an app running in the background became increasingly difficult. Some manufacturers even modified the OS kernel to kill background processes to save battery. Ultimately, balancing a full-time job with the demands of maintaining such a complex app became unsustainable.

Technical Limitations: Back then, deep learning wasn’t mainstream. Instead, he detected screams from sound frequency and volume, using a “sliding window” technique to minimize detection errors (both Type I and Type II, i.e., false alarms and missed screams).
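Mr. Raj didn’t share his code, so the following is only a minimal sketch of the general idea he described: score each short audio frame on volume and frequency, and raise an alert only when enough frames inside a sliding window qualify. The thresholds, the zero-crossing frequency estimate, and every function name here are assumptions chosen for illustration, not his implementation. Python is used for readability; a real app would run equivalent logic in Kotlin or Swift on live microphone frames.

```python
import math
from collections import deque
from typing import Deque, List, Sequence

# Illustrative thresholds (assumptions, not values from the Chilla app).
RMS_THRESHOLD = 0.3     # loudness gate for samples normalized to [-1.0, 1.0]
MIN_FREQ_HZ = 1000.0    # assumed lower bound on a scream's dominant frequency
WINDOW_FRAMES = 10      # number of recent frames the sliding window remembers
REQUIRED_HITS = 7       # loud, high-pitched frames needed inside the window


def frame_rms(frame: Sequence[float]) -> float:
    """Root-mean-square amplitude of one frame, used as a volume measure."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def zero_crossing_freq(frame: Sequence[float], sample_rate: int) -> float:
    """Crude dominant-frequency estimate: a tone at f Hz crosses zero 2f times per second."""
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    return crossings / (2 * len(frame) / sample_rate)


def detect(frames: Sequence[Sequence[float]], sample_rate: int) -> List[int]:
    """Return the frame indices at which the sliding window raises an alert."""
    window: Deque[bool] = deque(maxlen=WINDOW_FRAMES)
    alerts: List[int] = []
    for i, frame in enumerate(frames):
        loud = frame_rms(frame) >= RMS_THRESHOLD
        high = zero_crossing_freq(frame, sample_rate) >= MIN_FREQ_HZ
        window.append(loud and high)
        # A single loud, shrill frame (a brief squeal) is not enough;
        # several hits within the window are required before alerting.
        if sum(window) >= REQUIRED_HITS:
            alerts.append(i)
            window.clear()  # one alert per event instead of one per frame
    return alerts


def tone(freq_hz: float, amplitude: float, sample_rate: int = 16000, n: int = 512) -> List[float]:
    """Synthetic sine-wave frame for trying the detector without a microphone."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * k / sample_rate) for k in range(n)]


if __name__ == "__main__":
    quiet = [tone(300, 0.05)] * 5
    shrill = [tone(2500, 0.8)] * 8
    print(detect(quiet + shrill, 16000))  # → [11]
```

The ratio of `REQUIRED_HITS` to `WINDOW_FRAMES` is the knob that trades the two error types against each other: raising it suppresses brief false triggers but risks missing a short scream.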

I looked for similar apps in Korea, but they all followed a similar pattern: the user had to press a specific physical button (like power or volume) multiple times to trigger an alert. From a developer’s perspective, sending an SMS based on a button press is a simple architecture. However, as we saw in the motel incident, reaching for a button is often an impossible luxury in a real crisis. If a victim can reach their phone to press a button, they might as well call 112 or 911 directly. Current solutions simply aren’t intuitive enough for a high-stress, life-threatening situation.

The Challenge: Defining a “Scream”

I decided to pursue a more direct solution: a system that calls for help based on screams alone. But this raised a fundamental question: what exactly is a “scream”? I searched academic papers, but I couldn’t find a definitive study characterizing the frequency or pitch range of a human scream. Without a clear definition, how could I build a detector?

One “brute-force” idea was to record audio in real-time, split it into chunks, and send it to a ChatGPT-style API for detection. But this approach is fundamentally flawed: it poses massive privacy risks, incurs high data costs, and the API latency would be too slow for an emergency.

My Vision for a Better Solution

Despite these hurdles, this thought experiment helped me define the core requirements for a truly useful solution:

Simplicity and Intuition: The system must be easy to maintain, and the way it seeks help must be as instinctive as the scream itself.

On-Device Processing: To protect privacy and ensure speed, the audio must never be stored as a file or sent to an external server. All detection must happen locally on the device.
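The on-device requirement can be made concrete as a pipeline in which raw audio lives only in a small, fixed-size memory buffer and the only thing that ever leaves the detector is an alert event. The sketch below assumes a pluggable `is_scream` function; the placeholder `loud_last_frame` is hypothetical and stands in for a real model. It writes nothing to disk and makes no network calls.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List, Optional, Sequence

Frame = Sequence[float]


@dataclass(frozen=True)
class AlertEvent:
    """The only data that leaves the detector: a timestamp, never audio."""
    timestamp_s: float


class OnDeviceMonitor:
    """Keeps raw audio only in a bounded in-memory buffer; nothing is written to disk."""

    def __init__(self, is_scream: Callable[[List[Frame]], bool], max_frames: int = 50) -> None:
        self._is_scream = is_scream
        # deque(maxlen=...) silently discards the oldest frame once full.
        self._buffer: Deque[Frame] = deque(maxlen=max_frames)

    @property
    def buffered_frames(self) -> int:
        return len(self._buffer)

    def process(self, frame: Frame, timestamp_s: float) -> Optional[AlertEvent]:
        self._buffer.append(frame)
        if self._is_scream(list(self._buffer)):
            self._buffer.clear()  # drop the audio as soon as a decision is made
            return AlertEvent(timestamp_s)
        return None


def loud_last_frame(recent: List[Frame]) -> bool:
    """Placeholder detector (hypothetical): peak amplitude of the newest frame."""
    return max(abs(x) for x in recent[-1]) > 0.5


if __name__ == "__main__":
    monitor = OnDeviceMonitor(loud_last_frame, max_frames=3)
    frames = [[0.1, -0.1]] * 5 + [[0.9, -0.8]]
    events = []
    for t, frame in enumerate(frames):
        event = monitor.process(frame, float(t))
        if event is not None:
            events.append(event)
    print(events)  # → [AlertEvent(timestamp_s=5.0)]
```

Keeping the buffer bounded means memory use stays constant no matter how long the app listens, and there is never a recording to leak.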

The Fundamental Dilemma: Is Every Scream a Cry for Help?

The Problem of Context and Categorization

As I delved deeper, I encountered a more fundamental question: How can a system distinguish between a desperate cry for help and a scream of excitement?

My entire premise rests on the logic that “A scream = A need for help.” However, in reality, there are countless counterexamples. People scream at rock concerts when they see their favorite idols, and they scream in joy or during play. If the system cannot classify these sounds accurately, the alerts lose their credibility.

Learning from “Alert Fatigue”

We’ve already seen the consequences of unreliable alerts during the COVID-19 pandemic. At first, every emergency text caused panic. But as they became frequent and repetitive, people grew desensitized, eventually ignoring or even disabling the notifications.

To reiterate, a help-seeking system must rest on three pillars: (1) it must be trustworthy, (2) it must be able to provide appropriate aid, and (3) it must be immediate. Frequent false alarms erode trust in the system, and once recipients start to doubt the urgency of an alert, the “immediate” response vanishes.
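The cost of false alarms becomes clear with some back-of-the-envelope arithmetic. An always-on detector classifies tens of thousands of audio windows per day, so even a tiny per-window error rate adds up; and because real emergencies are rare, most alerts can end up being false (the base-rate effect from Bayes’ rule). The rates below are hypothetical numbers picked for illustration, not measurements of any app.

```python
SECONDS_PER_DAY = 86_400


def false_alarms_per_day(false_positive_rate: float, window_s: float) -> float:
    """Expected false alerts per day if every audio window is classified independently."""
    return false_positive_rate * (SECONDS_PER_DAY / window_s)


def alert_precision(sensitivity: float, false_positive_rate: float, prior: float) -> float:
    """Bayes' rule: probability that an alert corresponds to a real emergency.

    prior is the fraction of windows that truly contain a cry for help.
    """
    true_alerts = sensitivity * prior
    false_alerts = false_positive_rate * (1.0 - prior)
    return true_alerts / (true_alerts + false_alerts)


if __name__ == "__main__":
    # Hypothetical: 1-second windows and a detector that is wrong on 0.1% of them.
    print(round(false_alarms_per_day(0.001, 1.0), 1))  # → 86.4
    # Even with 95% sensitivity, if only 1 window in a million is a real emergency,
    # almost every alert is a false alarm.
    print(f"{alert_precision(0.95, 0.001, 1e-6):.2%}")  # → 0.09%
```

A detector that is right 99.9% of the time would still interrupt someone dozens of times a day, which is exactly the recipe for the alert fatigue described above.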

In Search of a Unique Signature

The core challenge then becomes: Is a “danger scream” acoustically distinct? Does it have unique features that separate it from a joyful shout? Finding an answer to this was incredibly difficult. I spent hours scouring research papers and even consulted ChatGPT to see if there were defined acoustic markers—such as specific frequency modulations or “roughness”—that characterize human distress. If I couldn’t define the physical properties of a “real” scream, I couldn’t expect a machine to learn it.

| Link | Title |
| --- | --- |
| https://www.sciencedaily.com/releases/2021/04/210413144922.htm | Neurocognitive processing efficiency for discriminating human non-alarm rather than alarm scream calls; Sascha Frühholz |
| https://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.3000751 | |
| https://www.dailymail.co.uk/sciencetech/article-9437799/Most-people-tell-difference-screams-joy-fear-sound-similar.html | |
| https://www.technologynetworks.com/neuroscience/news/joyful-screams-processed-by-the-brain-faster-than-screams-of-fear-or-anger-347704 | Joyful Screams Processed by the Brain Faster than Screams of Fear or Anger |
| https://neurosciencenews.com/scream-emotions-18223/ | Human Screams Communicate At Least Six Emotions |
| https://scitechdaily.com/human-screams-communicate-at-least-six-emotions-surprisingly-acoustically-diverse/ | |

The Paradox: Machine Logic vs. Human Perception

A Meaningful Difference

After extensive research, I concluded that the studies do find significant acoustic differences between types of screams. The differences appear distinct enough that, in theory, a model could be trained to categorize them accurately.

The Human Limitation

However, here lies the paradox. As several research papers have pointed out, even if the acoustic attributes are different enough for a machine to distinguish, the human ear cannot. To us, a scream of pure ecstasy and a scream of sheer terror often sound identical. We lack the biological “sensors” to process the subtle frequency modulations that separate joy from fear.

The Labeling Nightmare

This created a massive hurdle for my project. If I were to collect scream samples and label them for deep learning, how could I ensure the labels were correct? If a human can’t reliably tell whether a recorded clip is a shout of joy or a cry for help, then the manual labeling process becomes a gamble. I couldn’t simply guess; the integrity of the data would be compromised.

In Pursuit of the Experts

I wasn’t ready to give up. To solve this dilemma, I dug into the methodologies of existing studies to understand how researchers overcame this “perceptual gap”: how they validated their data and what ground truth they used. With these questions in mind, I emailed the professors who conducted these studies to ask for their guidance on data labeling and acoustic validation.

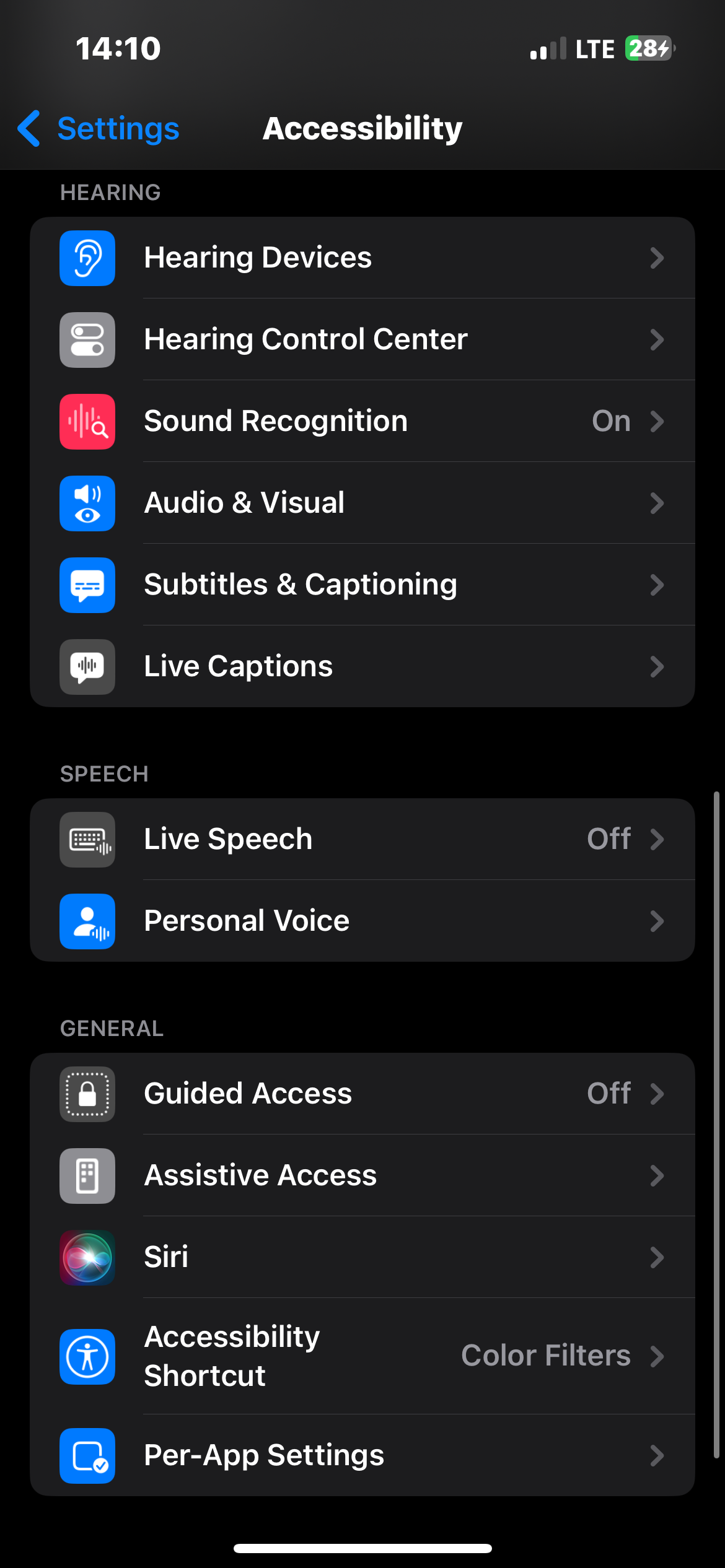

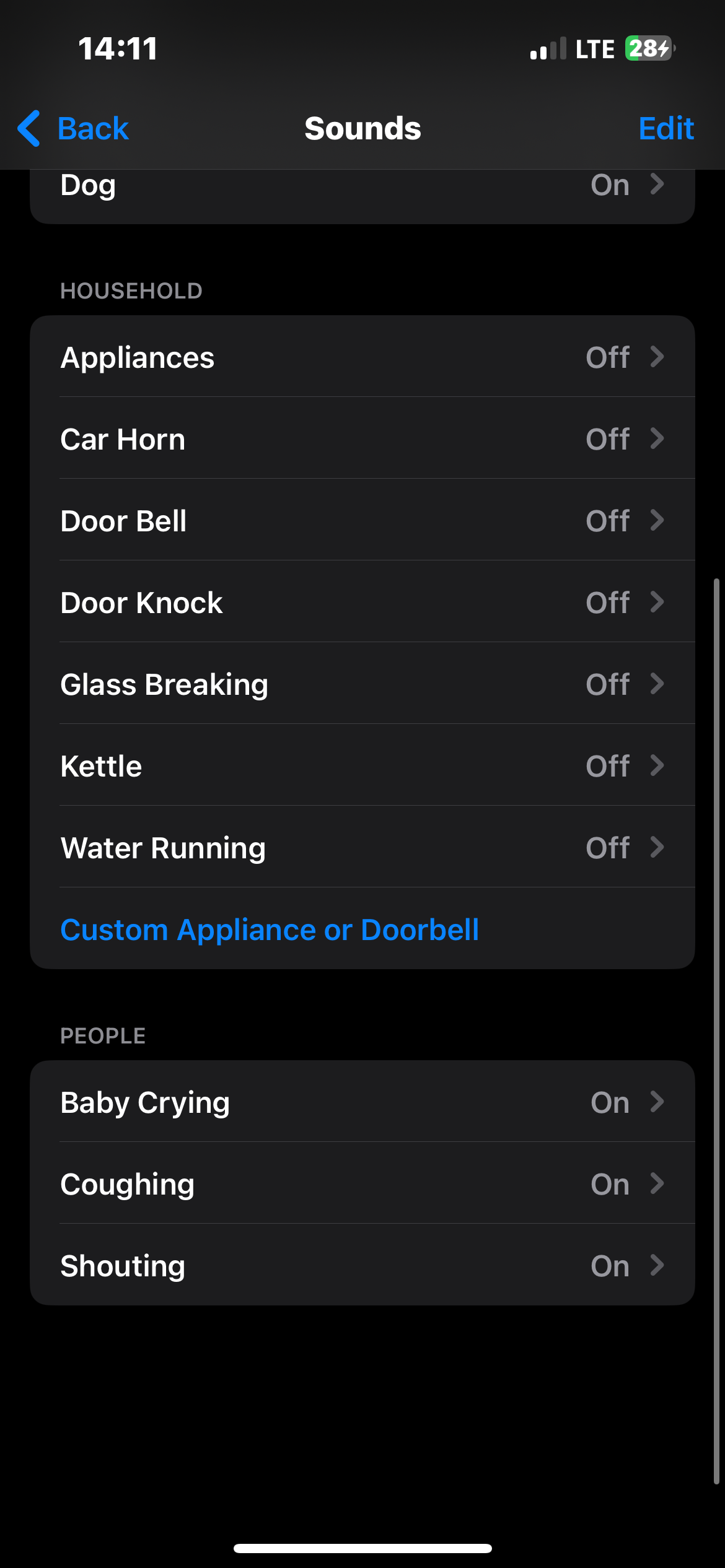

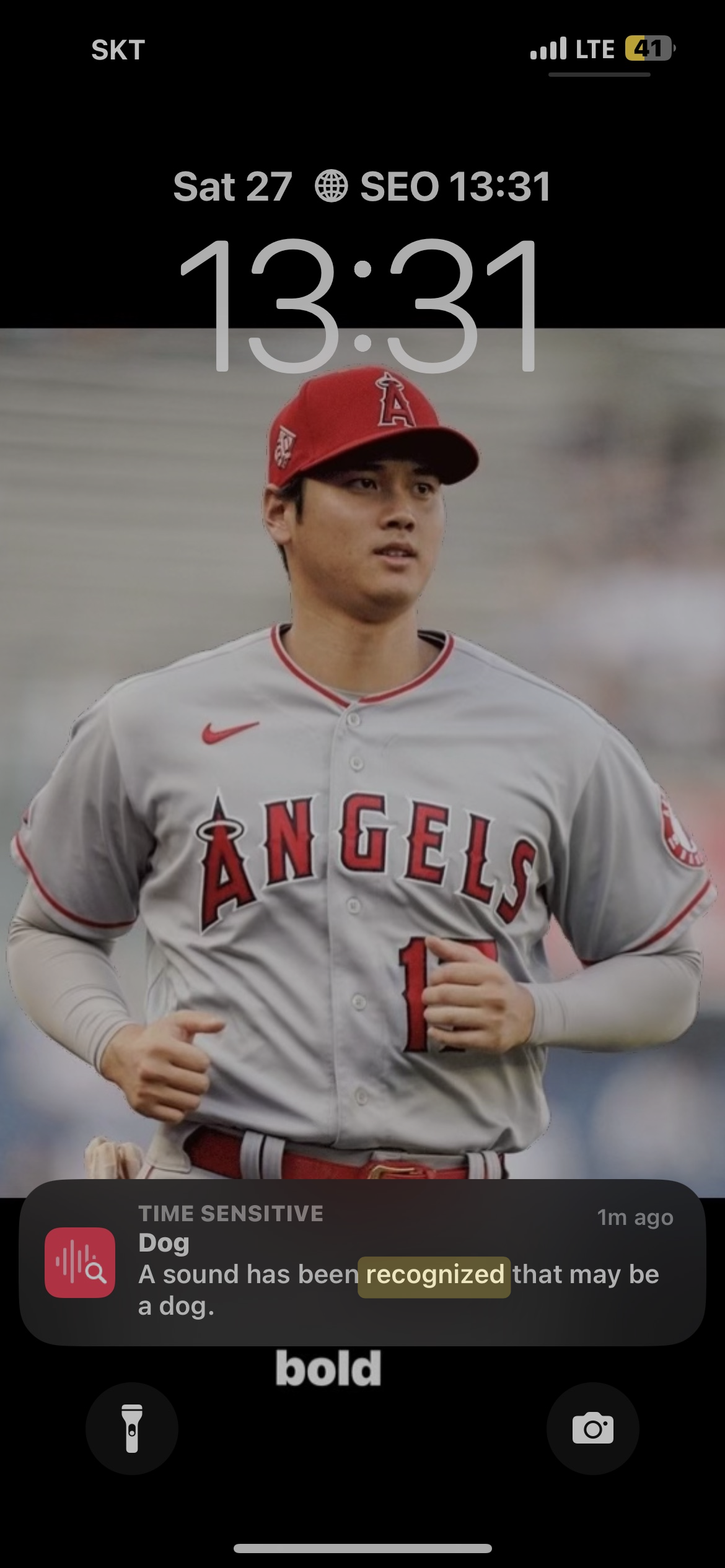

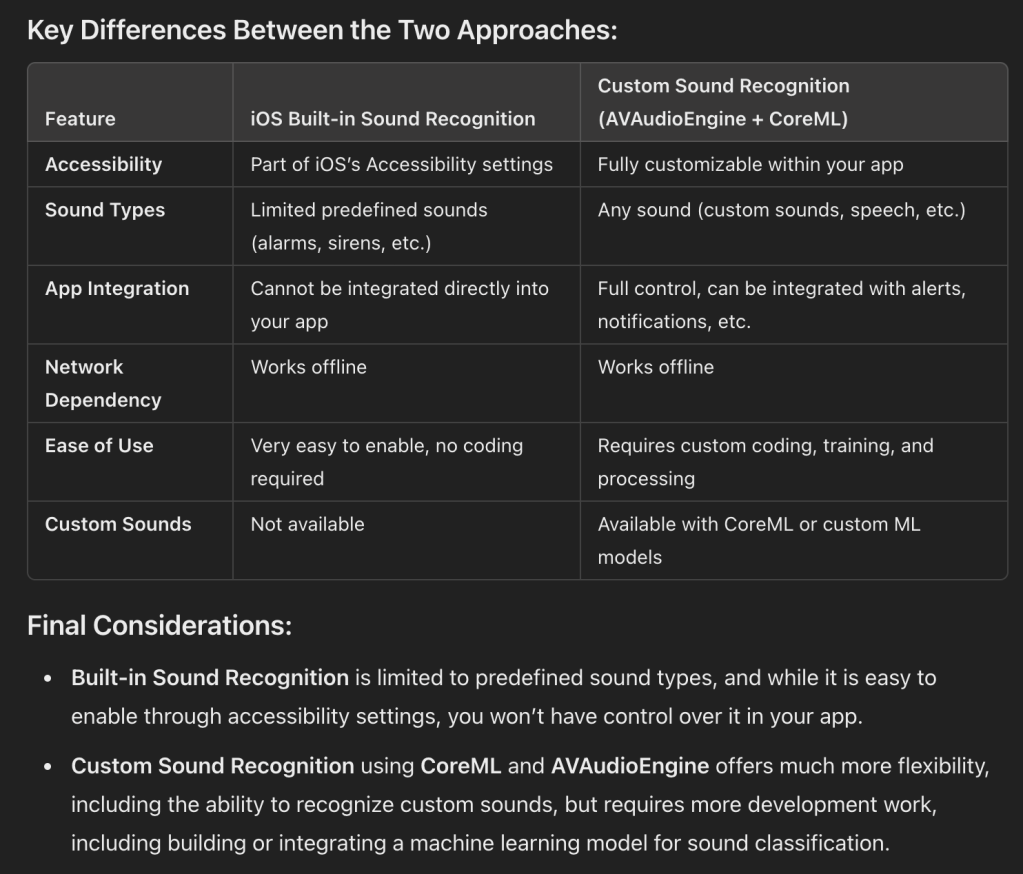

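Separately, while I was thinking about how to implement this feature, I discovered that the iPhone already has a built-in Sound Recognition feature. It was introduced in iOS 14: it listens for specific ambient sounds and, when it detects one, notifies the user that the sound occurred nearby. Because Apple ships it as part of the iPhone’s system software, there was also no risk of audio leaking off the device. However, after enabling it and using it for a week, I found that its accuracy was not very high.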

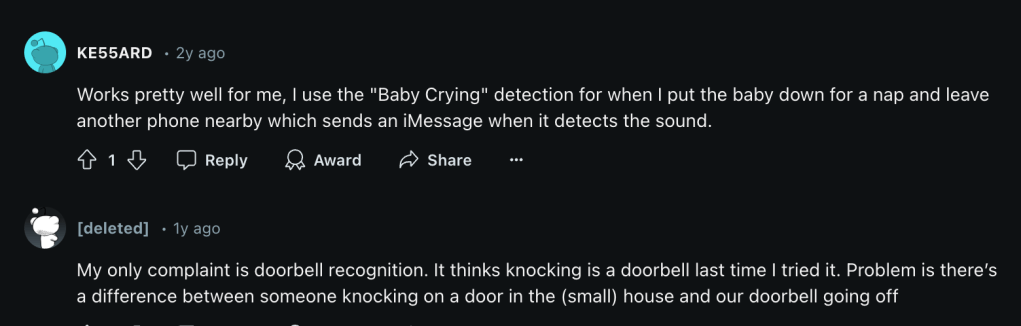

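Opinions on Reddit were mixed. Fans said it detected dog barks and shouting quite well, while critics complained that it drained the battery and often failed to detect sounds. Still, I judged that this OS-level feature could be very useful if leveraged well. For example, if it were offered as an API, my third-party app could cross-check its own results against it to improve accuracy: if my app detected a scream and iOS’s built-in feature also detected shouting, the situation would be much more likely to be genuinely dangerous.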
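The cross-checking idea amounts to AND-fusion of two detectors: alert only when both fire at roughly the same time. Below is a minimal sketch, with hypothetical timestamps standing in for the iOS side (which, as it turned out, has no public API); the function names and the 2-second tolerance are assumptions. The rate calculation assumes the two detectors’ errors are independent. In practice both hear the same sound, so their errors are correlated and the real false-positive reduction would be smaller.

```python
from typing import List, Sequence, Tuple


def corroborated(app_events: Sequence[float], os_events: Sequence[float],
                 tolerance_s: float = 2.0) -> List[float]:
    """Keep only the app's scream detections that the second detector confirms
    within tolerance_s seconds (a logical AND of the two detectors)."""
    return [t for t in app_events if any(abs(t - u) <= tolerance_s for u in os_events)]


def and_fusion_rates(sens_a: float, fpr_a: float,
                     sens_b: float, fpr_b: float) -> Tuple[float, float]:
    """Sensitivity and false-positive rate of requiring both detectors to fire,
    assuming their errors are statistically independent."""
    return sens_a * sens_b, fpr_a * fpr_b


if __name__ == "__main__":
    app = [10.0, 50.0, 120.0]   # hypothetical scream detections (seconds)
    ios = [11.5, 300.0]         # hypothetical "shouting" detections from iOS
    print(corroborated(app, ios))  # → [10.0]
    sens, fpr = and_fusion_rates(0.90, 0.01, 0.80, 0.05)
    print(f"sensitivity={sens:.2f}, false-positive rate={fpr:.4f}")
    # → sensitivity=0.72, false-positive rate=0.0005
```

The trade-off is visible in the numbers: requiring agreement cuts false alarms sharply, but every scream one detector misses is also lost, so combined sensitivity drops.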

Unfortunately, Apple does not expose this feature through an API. Since I couldn’t use it, I concluded that I would have to train and build the detection capability myself.

Leave a comment